Intuit: bringing a human touch to AI-generated art

An advanced AI-powered tool for creators, based on gesture recognition

The project was created during the 48-hour HackZurich 2020 hackathon as an answer to the Logitech challenge, which asked: what is the future of digital creation and human-computer interaction?

Problem

The workflows of digital artists are becoming increasingly complex. Meanwhile, AI-assisted tools, and even AIs that create art themselves, are emerging.

So where is the place for artists in this process?

How can we bring more of the human into the process of creation?

Is there any place for human touch and intuition in the world of controllers, toggles, software, and hardware?

Solution

With Intuit we want to bring back the incredible power of human intuition and human touch to the digital creation process. We do this by removing the complex user interfaces, sliders, inputs, and buttons and enabling the human's most powerful crafting tool: the hand.

We use the latest in AI computer vision to detect the nuances of the creator's hand positions and movements and use that as input for advanced AI tools that are able to generate music, images, and 3D assets.

The feedback is immediate. Rather than using abstract interfaces, you simply change your hand posture, the AI changes the output accordingly, and you can see what feels right.

This is a whole new way for creators to shape their digital art.

Product presentation

Who can use it

Pretty much everyone who wants to use hands and gestures in their creative process.

Digital artists: all the possible colors and shapes at your fingertips

Musicians: mix, edit, and speed up with a single swing

Photographers: tune your photos faster and finer by using gestures

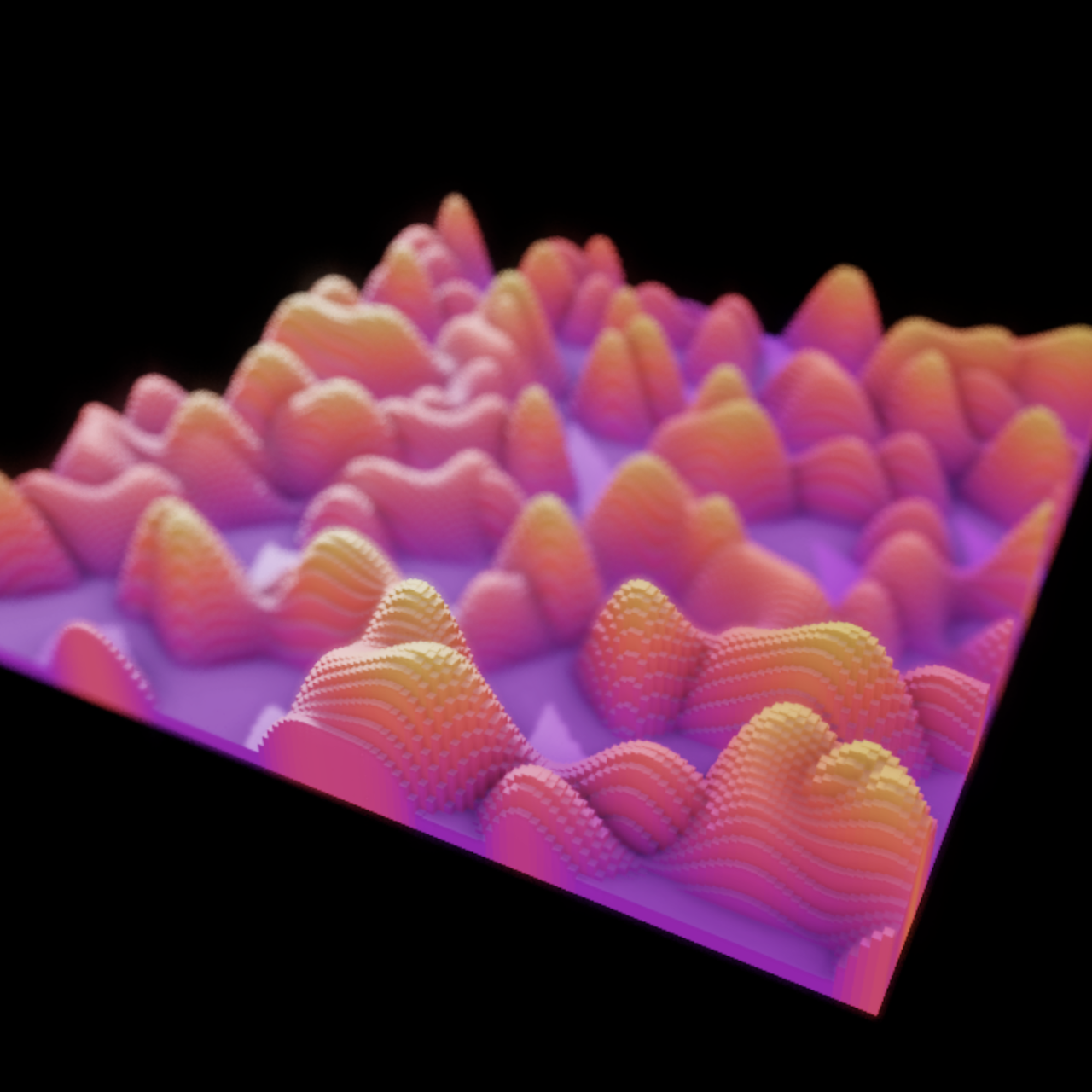

3D artists: endless opportunities to explore textures and materials

How we built it in 48 hours

- We use standard browser APIs to capture your webcam footage

- Video frames are then fed to the TensorFlow.js Handpose model

- The model returns a prediction of each hand joint as 3D coordinates

- We use React as our primary front-end library, which hooks up predictions to the application state

- Then, using a sigmoid function, we map the received coordinates onto an abstract scale from 1 to 100

- The normalized data can be used as input for any kind of AI or digital generator

- We built a small prototype that controls the size and color of a Kawaii Cat (check out the demo)

- We created a Product page to show the various use cases of the technology as a product demo for Logitech

The entire solution runs in the browser.
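The mapping step above can be sketched in a few lines. This is only an illustration, not the project's actual code: the helper names (`toScale`, `pinchValue`), the `midpoint` and `steepness` parameters, the choice of thumb-to-index distance as the gesture, and the joint indices 4 and 8 (assuming a common 21-joint hand ordering) are all assumptions made for the example.

```typescript
// One hand joint as [x, y, z], matching the 3D coordinates described above.
type Landmark = [number, number, number];

// Standard logistic sigmoid: squashes any real number into the range 0..1.
function sigmoid(t: number): number {
  return 1 / (1 + Math.exp(-t));
}

// Hypothetical helper: map a raw measurement onto the 1..100 scale.
// `midpoint` is the raw value that should land in the middle of the scale;
// `steepness` controls how quickly the output saturates (both assumed).
function toScale(raw: number, midpoint: number, steepness: number): number {
  return 1 + 99 * sigmoid(steepness * (raw - midpoint));
}

// Euclidean distance between two joints.
function distance(a: Landmark, b: Landmark): number {
  return Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);
}

// Assumed joint indices: 4 = thumb tip, 8 = index fingertip.
const THUMB_TIP = 4;
const INDEX_TIP = 8;

// Example gesture feature (assumed): how far apart thumb and index finger are,
// expressed as a value between 1 and 100.
function pinchValue(
  landmarks: Landmark[],
  midpoint = 100,
  steepness = 0.05
): number {
  const gap = distance(landmarks[THUMB_TIP], landmarks[INDEX_TIP]);
  return toScale(gap, midpoint, steepness);
}
```

A value like this could then be stored in the application state and passed to whatever generator is being controlled, for example as the size of the cat in the prototype. The sigmoid keeps the output inside the scale even when the hand moves far outside the expected range.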

Check out our Devpost page to learn how it was built.

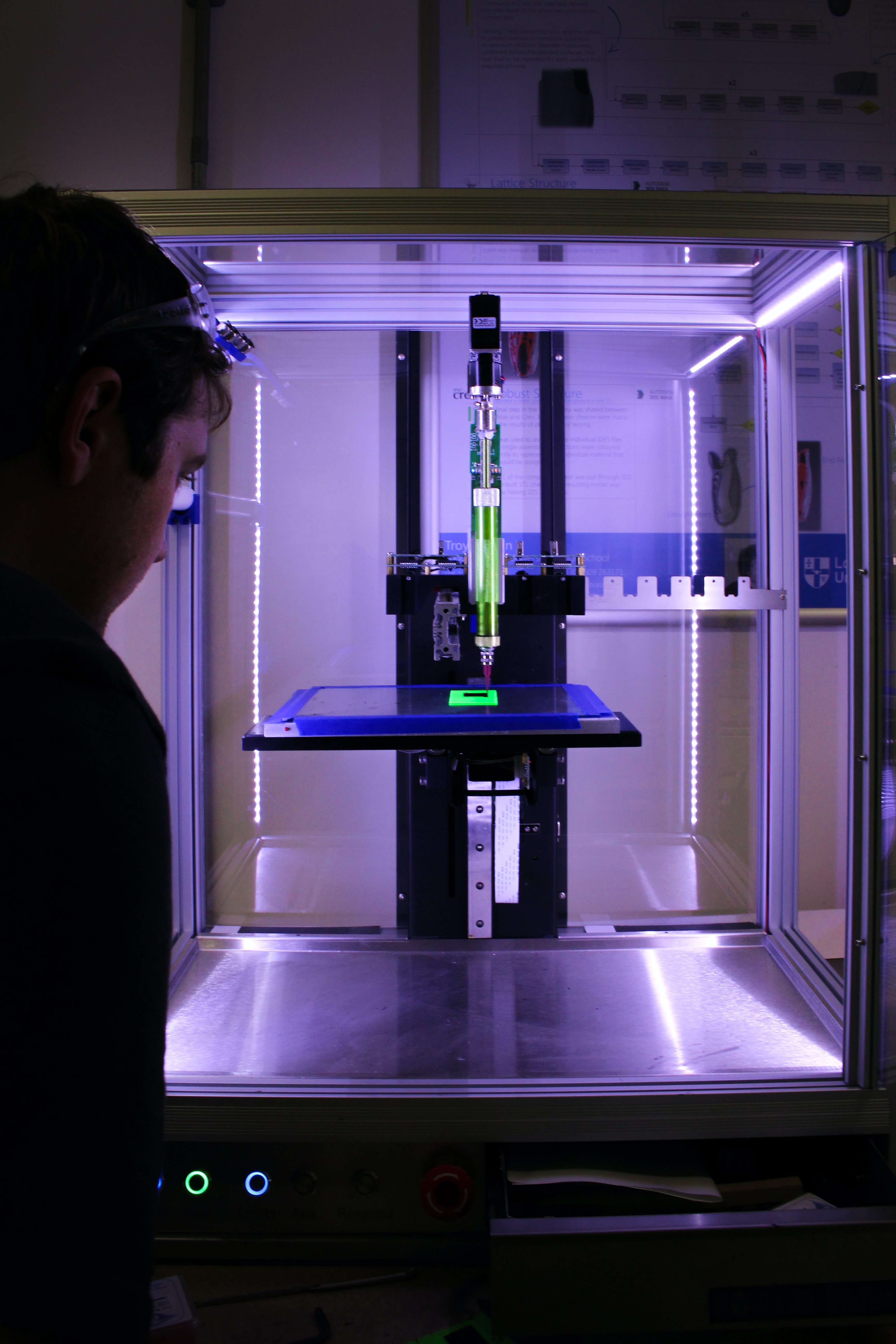

Photo by SwapnIl Dwivedi on Unsplash

Photo by Rob Wingate on Unsplash

© Anna Lukyanchenko 2024 — all rights reserved